Question: I know how AHP works, but I am struggling with how to resolve inconsistency (CR > 0.1) once participants have finished their pairwise comparisons. It is time-consuming for them to go through the matrix and re-evaluate all their inputs. Do you have any suggestions?

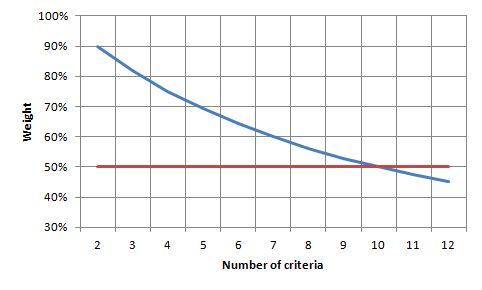

Answer: Yes, CR is often a problem. My own projects also show that, for many participants, CR ends up higher than 0.1 after the pairwise comparisons. Based on a sample of nearly 100 respondents in different AHP projects, the median CR is 16%, i.e. only half of the participants achieve a CR below 16%; the 80th percentile is 36%. CR also tends to increase with the number of criteria: the median increases significantly for more than 5 criteria.

From my experience, CR > 0.1 is not critical per se. I get reasonable weights for a CR of 0.15 or even higher (up to 0.3), depending on the number of criteria. Whether a higher CR is acceptable also depends on the kind of project (the specific decision problem), the resulting priorities and the required accuracy (how much do minor changes in the criteria weights actually affect the result?).
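For reference, the CR discussed here can be computed from a pairwise comparison matrix roughly as follows. This is a minimal sketch, assuming the eigenvector method and Saaty's published random index values; the function names are illustrative, and the Excel template or AHP-OS may compute CR differently.

```python
# Saaty's random index (RI) per matrix size. This is an assumption:
# other RI tables and approximations exist.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def principal_eigen(matrix, iterations=200):
    """Return (priority vector, lambda_max) using power iteration."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        v = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]
    # w sums to 1, so the sum of A*w estimates the principal eigenvalue.
    lam = sum(sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n))
    return w, lam


def consistency_ratio(matrix):
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1)."""
    n = len(matrix)
    _, lam = principal_eigen(matrix)
    ci = (lam - n) / (n - 1)
    return ci / RANDOM_INDEX[n]


# Hypothetical judgments for three criteria (not taken from any project).
example = [
    [1, 3, 1 / 2],
    [1 / 3, 1, 3],
    [2, 1 / 3, 1],
]
cr = consistency_ratio(example)
print(f"CR = {cr:.2f}, above 0.1: {cr > 0.1}")
```

The threshold you compare `cr` against is a project decision, as discussed above; the code only reports the value.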

In my latest AHP Excel template and in the AHP online software AHP-OS, the three most inconsistent judgments are highlighted, and the ideal judgment (the one resulting in the lowest inconsistency) is shown. This helps participants adjust their judgments on the scale to make their answers more consistent.
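One way such highlighting could work is sketched below. It is an assumption, not necessarily the method used in the template or in AHP-OS: each judgment a_ij is compared with the ratio w_i / w_j of the calculated priorities, and the pairs with the largest relative deviation are reported together with that ratio as the "ideal" value. All names are illustrative.

```python
def priorities(matrix, iterations=200):
    """Priority vector (principal eigenvector) via power iteration."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        v = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]
    return w


def most_inconsistent(matrix, top=3):
    """Return up to `top` tuples (deviation, i, j, judgment, ideal).

    Assumption: a judgment is 'ideal' when it equals w_i / w_j, and its
    deviation is the factor by which it differs from that ratio.
    """
    n = len(matrix)
    w = priorities(matrix)
    ranked = []
    for i in range(n):
        for j in range(i + 1, n):
            ideal = w[i] / w[j]
            error = matrix[i][j] / ideal
            deviation = max(error, 1.0 / error)
            ranked.append((deviation, i, j, matrix[i][j], ideal))
    ranked.sort(reverse=True)
    return ranked[:top]


# Hypothetical 4x4 matrix: consistent except for the judgment between
# criteria 0 and 3, which was entered as 1/2 instead of 8.
example = [
    [1, 2, 4, 1 / 2],
    [1 / 2, 1, 2, 4],
    [1 / 4, 1 / 2, 1, 2],
    [2, 1 / 4, 1 / 2, 1],
]
for deviation, i, j, judgment, ideal in most_inconsistent(example):
    print(f"pair ({i}, {j}): judgment {judgment:.2f}, ideal {ideal:.2f}")
```

The reported ideal value is generally not a point on the 1 to 9 scale, so a participant would move the judgment one or two steps in its direction instead of entering it directly.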

The first measure to keep inconsistencies low is to stick to the Magical Number Seven, Plus or Minus Two, i.e. keep the number of criteria between 5 and 9. This reflects the limits of human capacity for processing information, originally described by George A. Miller in 1956 and taken up by Saaty and Ozdemir in a 2003 publication. Review your criteria selection and, if you really need more, try to cluster them in groups of 5 to 9.

Another possibility to improve consistency is to select the balanced-n scale instead of the standard AHP scale. In my sample, changing from the standard AHP scale to the balanced scale decreases the median CR from 16% to 6%. You can select different scales in my template.
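A scale change can be applied to existing judgments without asking participants again. The sketch below assumes the classic balanced scale, where a judgment x on the 1 to 9 scale is mapped to c = (9 + x) / (11 - x); the balanced-n variant offered in the template may use a different formula. It also assumes Saaty's random index values are reused unchanged for the rescaled matrix. All names are illustrative.

```python
# Saaty's random index values (an assumption; other tables exist).
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def balanced(judgment):
    """Map a 1..9 judgment (or its reciprocal) to the balanced scale."""
    if judgment >= 1:
        return (9 + judgment) / (11 - judgment)
    x = 1 / judgment  # reciprocal judgments map to reciprocal values
    return (11 - x) / (9 + x)


def rescale(matrix):
    """Apply the balanced scale to every entry of the comparison matrix."""
    n = len(matrix)
    return [[balanced(matrix[i][j]) for j in range(n)] for i in range(n)]


def consistency_ratio(matrix, iterations=200):
    """CR from the principal eigenvalue, estimated by power iteration."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        v = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]
    lam = sum(sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n))
    return (lam - n) / (n - 1) / RANDOM_INDEX[n]


# Hypothetical judgments on the standard 1..9 scale.
standard = [
    [1, 3, 1 / 2],
    [1 / 3, 1, 3],
    [2, 1 / 3, 1],
]
cr_standard = consistency_ratio(standard)
cr_balanced = consistency_ratio(rescale(standard))
print(f"standard scale CR = {cr_standard:.2f}")
print(f"balanced scale CR = {cr_balanced:.2f}")
```

The priorities change along with CR, so it is worth comparing both sets of weights before settling on a scale.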

Conclusion

- Try to keep the number of criteria between 5 and 7; never use more than 9.

- Ask decision makers to adjust their judgments towards the most consistent input for the three highlighted most inconsistent pairwise comparisons. A slight adjustment of the intensities by 1 or 2 up or down can sometimes help.

- Accept answers with CR > 10%, practically up to 20%, depending on the nature and objective of your project.

- Do the eigenvector calculation with the balanced scale instead of the AHP scale, and compare the resulting priorities and consistency. This does not require redoing the pairwise comparisons.

References

George A. Miller, The Magical Number Seven, Plus or Minus Two: Some Limits on Our Capacity for Processing Information, The Psychological Review, 1956, vol. 63, pp. 81-97

Saaty, T.L. and Ozdemir, M.S. Why the Magic Number Seven Plus or Minus Two, Mathematical and Computer Modelling, 2003, vol. 38, pp. 233-244

Goepel, K.D., Comparison of Judgment Scales of the Analytical Hierarchy Process - A New Approach, Preprint of an article submitted for consideration in International Journal of Information Technology and Decision Making © 2017 World Scientific Publishing Company http://www.worldscientific.com/worldscinet/ijitdm (2017)